In concurrent programming, a critical section is a portion of code that accesses shared resources (such as variables, data structures, or files) which must be accessed by multiple threads or proces...

In concurrent programming, a critical section is a portion of code that accesses shared resources (such as variables, data structures, or files) which must be accessed by multiple threads or processes. It is critical because if multiple threads or processes execute this section concurrently, it could lead to unexpected behaviour, data corruption, or incorrect results due to race conditions.

Understanding the Critical Section Problem

The critical section problem arises when multiple processes or threads need to access and modify shared resources simultaneously. The main challenge is to ensure that only one process or thread enters the critical section at a time to prevent conflicts.

If not managed properly, this can lead to race conditions, where the system's behaviour depends on the sequence or timing of uncontrollable events, causing unpredictable and erroneous results known as data inconsistency.

For instance, if two threads simultaneously update a shared variable without proper synchronisation, the final value of the variable might be incorrect, leading to potential system failures or incorrect computations.

Race Conditions

A race condition occurs when the outcome of a program depends on the sequence or timing of uncontrollable events, such as the order in which threads are scheduled to run. This can lead to unpredictable and erroneous behaviour in concurrent systems.

Consider two threads attempting to increment a shared counter variable. If both read the counter's value simultaneously and then increment it, the final value will reflect only one increment instead of two, leading to incorrect results.

Race conditions often result in data inconsistency. For example, in a banking application, if two transactions are processed concurrently without proper synchronisation, the final account balance may be incorrect.

To prevent race conditions, synchronisation mechanisms like locks, mutexes, or other atomic operations are necessary. These ensure that only one thread accesses the critical section at a time.

Deadlock

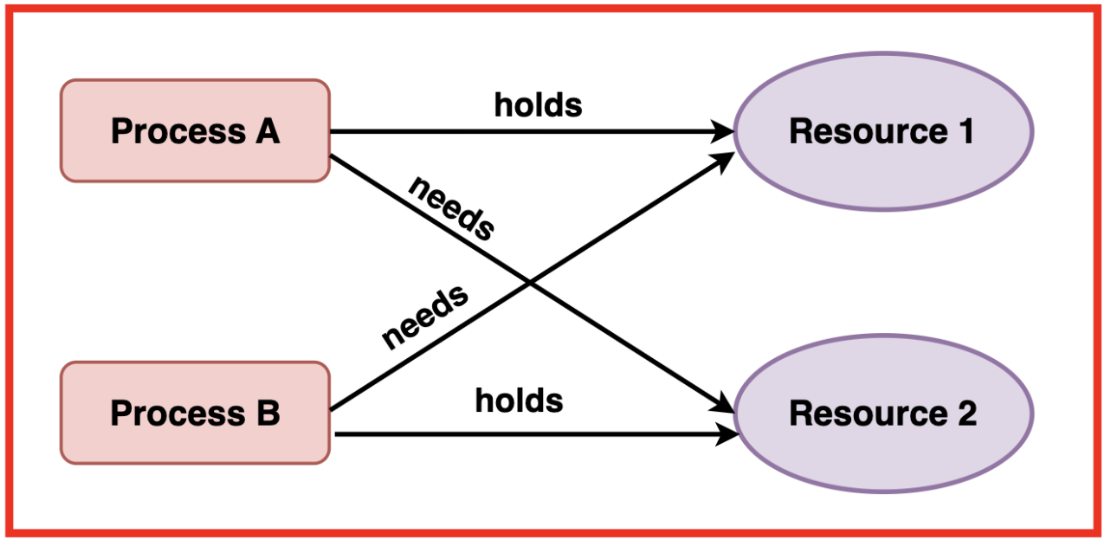

A deadlock is a situation where a set of processes are blocked because each process is holding a resource and waiting for another resource held by another process. The conditions for a deadlock to occur include:

- Mutual Exclusion: At least one resource must be held in a non-shareable mode.

- Hold and Wait: A process must be holding at least one resource while waiting to acquire additional resources.

- No Preemption: Resources cannot be forcibly taken from processes until they voluntarily release them.

- Circular Wait: A set of processes exists where each process waits for a resource held by the next process in the sequence.

To prevent deadlock, multiple strategies can be employed, such as algorithms like the Banker's algorithm, which dynamically examine resource allocation states to ensure that circular wait conditions do not occur. Techniques to detect deadlock and methods to recover from it include resource preemption and process termination.

Starvation

Starvation is a situation where a process is perpetually denied necessary resources to proceed with its execution. It is often caused by priority scheduling, where low-priority processes never get the resources they need if high-priority processes keep preempting them.

To prevent starvation, techniques such as aging can be used, where the priority of a process increases the longer it waits.

Special thanks to Gauri Tomar for contributing to this article on takeUforward. If you also wish to share your knowledge with the takeUforward fam, please check out this article.